Deploying Your Customized Caffe Models on Intel® Movidius™ Neural Compute Stick

Why do I need a custom model?

The Neural Compute Application Zoo (NCAppZoo) downloads and compiles a number of pre-trained deep neural networks such as GoogLeNet, AlexNet, SqueezeNet, MobileNets, and many more. Most of these networks are trained on the ImageNet dataset, which has over a thousand classes (also called categories) of images. These example networks and applications make it easy for developers to evaluate the platform and build simple projects. If you plan on building a proof of concept (PoC) for an edge product, such as a smart digital camera, a gesture-controlled drone, or an industrial smart camera, you will probably need to customize your neural network.

Click here for a community contributed Chinese translation of this blog.

Let us suppose you are building a smart front door security camera. You won’t need a ‘zebra’, ‘armadillo’, ‘lionfish’, or many of the other thousand classes defined in ImageNet; instead you probably need just 15 to 20 classes such as ‘person’, ‘dog’, ‘mailman’, ‘person wearing hoody’, etc. By reducing your dataset from a thousand classes down to 20, you are also reducing the number of features that need to be extracted. This has a direct impact on your neural network’s complexity, which in turn impacts its size, training time, and inference time. In other words, by optimizing your neural network, you can achieve the following:

- Save time during network training, because you have a reduced dataset.

- This in turn saves money spent on keeping the training hardware up and running.

- This also helps speed up development time, so you can get to market faster.

- Reduce hardware BOM cost by minimizing the memory footprint of your model.

- The forward pass during inference would be faster because of the reduced complexity (i.e., the edge device can process camera frames much faster).

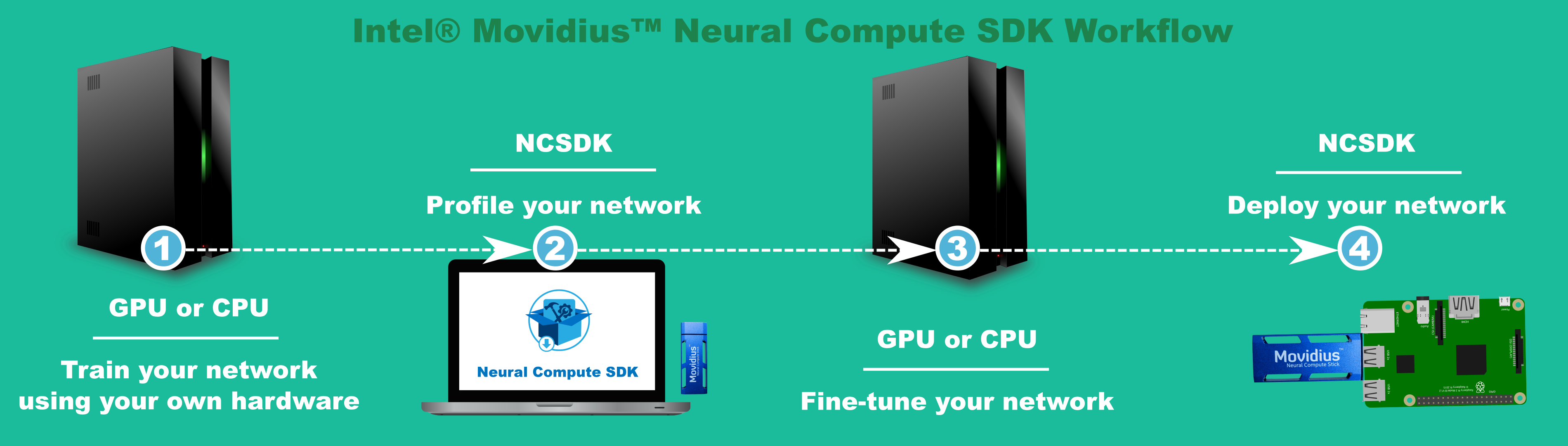

This article walks through the process of training a pre-defined neural network with a custom dataset, profiling it using the Intel® Movidius™ Neural Compute SDK (NCSDK), modifying the network to get a better execution time, and finally deploying the customized model to the Intel® Movidius™ Neural Compute Stick (NCS).

Practical learning!

You will build…

A customized GoogLeNet deep neural network that can classify a dog vs. a cat.

You will learn…

- How to profile a neural network using NCSDK’s mvNCProfile tool.

You will need…

- An Intel Movidius Neural Compute Stick - Where to buy.

- ‘Training-ready’ hardware such as Amazon® EC2, Intel® AI DevCloud, or a GPU-based system pre-installed with Caffe - Installation instructions.

- An x86_64 laptop/desktop pre-installed with NCSDK - Installation instructions.

Let’s build!

For the sake of simplicity, I have organized this article into four sections:

- Train - Neural network selection, dataset preparation, and training

- Profile - Analyze the neural network for bandwidth, complexity, and execution time

- Fine tune - Modify the neural network topology to gain better execution time

- Deploy - Deploy the customized neural network on an edge device powered by NCS

If your training hardware is not the same as the hardware on which NCSDK is installed, run sections 1 and 3 on your training hardware, and run sections 2 and 4 on the system where NCSDK is installed.

First, download the source code and helper scripts from NCAppZoo.

mkdir -p ~/workspace

cd ~/workspace

git clone https://github.com/ashwinvijayakumar/ncappzoo

cd ncappzoo

git checkout dogsvscats

1. Train

Neural network selection

Unless you are building a deep neural network from scratch, selecting the base neural network plays a critical role in the performance of your smart device. For example, if you are building a salmon species classifier, you can select a network topology that is simple enough to classify just a couple of classes (fewer features to extract), but it has to be fast enough to classify fish swimming past in rapid succession. On the other hand, if you are trying to build an inventory scanning robot for warehouse logistics, you may want to choose a network that sacrifices blazing-fast classification in favor of being able to classify a large variety of inventory.

Once you have a good base network, you can always fine-tune it to strike a good balance between accuracy, execution time, and power consumption. GoogLeNet was designed for the ImageNet 2014 challenge, which had one thousand classes. It is clearly overkill for an application that differentiates between dogs and cats, but we will use it to keep the tutorial simple, and also to clearly highlight the impact of customizing neural networks on accuracy and execution time.

Dataset preparation

- Download test1.zip and train.zip from Kaggle into ~/workspace/ncappzoo/apps/dogsvscats/data.

- Dataset preparation steps are compiled into the Makefile. These steps include:

  - Image pre-processing - resizing, cropping, histogram equalization, etc.

  - Shuffling the images

  - Splitting the images into training and validation sets

  - Creating an lmdb database of these images

  - Computing image mean - a common deep learning technique used to normalize data
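The shuffling and splitting steps listed above can be sketched in a few lines of Python. This is only an illustration and not the helper script from the project: the function names, the 80/20 split, the fixed seed, and the cat = 0 / dog = 1 label mapping are all assumptions made for the example.

```python
import random

def label_from_name(filename):
    """Derive a class label from names like 'cat.465.jpg' or 'dog.12.jpg'.

    The cat=0 / dog=1 mapping is an assumption made for this sketch.
    """
    return 0 if filename.startswith("cat") else 1

def shuffle_and_split(filenames, val_fraction=0.2, seed=42):
    """Return (train, val) lists of (filename, label) pairs."""
    items = [(name, label_from_name(name)) for name in filenames]
    rng = random.Random(seed)      # fixed seed keeps the split reproducible
    rng.shuffle(items)
    n_val = int(len(items) * val_fraction)
    return items[n_val:], items[:n_val]

# Hypothetical stand-in for the file names found in data/train
names = ["cat.%d.jpg" % i for i in range(5)] + ["dog.%d.jpg" % i for i in range(5)]
train_set, val_set = shuffle_and_split(names)
print(len(train_set), "training images,", len(val_set), "validation images")
```

Shuffling before splitting matters here because the file names are grouped by class; without it, the validation set could end up containing only one of the two classes.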

# The helper scripts use $CAFFE_PATH to navigate into your caffe installation directory.

export CAFFE_PATH=/PATH/TO/CAFFE/INSTALL_DIR

cd ~/workspace/ncappzoo/apps/dogsvscats

make

Make a note of the mean values displayed on the console; we will need them during inference.
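To make the idea of an image mean concrete, here is a minimal pure-Python sketch of computing one mean value per colour channel and subtracting it from an image. It is not what the Makefile runs; the tiny two-pixel "images" and the function names are hypothetical, and the blue-green-red channel order is assumed.

```python
def channel_means(images):
    """Average each colour channel over every pixel of every image.

    Each image is a list of (b, g, r) pixel tuples.
    """
    totals = [0.0, 0.0, 0.0]
    count = 0
    for image in images:
        for pixel in image:
            for c in range(3):
                totals[c] += pixel[c]
            count += 1
    return [t / count for t in totals]

def subtract_mean(image, mean):
    """Centre an image around zero by removing the per-channel mean."""
    return [tuple(pixel[c] - mean[c] for c in range(3)) for pixel in image]

# Two tiny hypothetical images with two pixels each
images = [[(100, 110, 120), (104, 114, 124)],
          [(108, 118, 128), (112, 122, 132)]]

mean = channel_means(images)
centered = subtract_mean(images[0], mean)
print("Per-channel mean:", mean)
print("First image after mean subtraction:", centered)
```

The same three mean values have to be applied at inference time, which is why the values printed by the dataset preparation step are worth writing down.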

If everything went well, you should see the following directory structure:

tree ~/workspace/ncappzoo/apps/dogsvscats/data

data

├── dogsvscats-mean.binaryproto

├── dogsvscats_train_lmdb

│ ├── data.mdb

│ └── lock.mdb

├── dogsvscats_val_lmdb

│ ├── data.mdb

│ └── lock.mdb

├── test1

│ ├── cat.92.jpg

│ ├── cat.245.jpg

│ └── ...

├── test1.zip

├── train

│ ├── cat.2388.jpg

│ ├── cat.465.jpg

│ └── ...

└── train.zip

Training

Given our small dataset (25,000 images), training from scratch wouldn’t take too long on powerful hardware, but let’s do our part in conserving global energy by adopting transfer learning. Since GoogLeNet was trained on the ImageNet dataset (which has images of cats and dogs), we can leverage the weights from a pre-trained GoogLeNet model.

Caffe makes it super easy for us to apply transfer learning by simply adding a --weights option to the training command. We would also have to change the training & solver prototxt (model definition) files depending on the type of transfer learning we adopt. In this example, we will choose the easiest type: a fixed feature extractor.
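As a rough illustration of what "fixed feature extractor" means, the toy sketch below keeps a set of "pre-trained" weights frozen and trains only a small classifier head on top of them. It is plain Python rather than Caffe, and everything in it (the 2x3 frozen matrix, the toy data, the learning rate, the names) is hypothetical; in the article's setup the frozen part is played by the GoogLeNet layers loaded through --weights.

```python
import math
import random

random.seed(0)

# Hypothetical "pre-trained" feature extractor weights; never updated below.
FROZEN_W = [[0.5, -0.2, 0.1],
            [-0.3, 0.8, 0.4]]

def extract_features(x):
    """Frozen layers: a linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]

def predict(head, bias, feats):
    """Trainable head: logistic regression on the frozen features."""
    z = sum(h * f for h, f in zip(head, feats)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Toy two-class data: label 1 when the first input exceeds the second.
samples = [[random.random() for _ in range(3)] for _ in range(200)]
labels = [1 if x[0] > x[1] else 0 for x in samples]

head, bias, lr = [0.0, 0.0], 0.0, 0.1
frozen_before = [row[:] for row in FROZEN_W]

for _ in range(20):                       # epochs
    for x, y in zip(samples, labels):
        feats = extract_features(x)       # forward pass through frozen layers
        err = predict(head, bias, feats) - y
        # Gradient step on the head only; FROZEN_W is deliberately untouched.
        head = [h - lr * err * f for h, f in zip(head, feats)]
        bias -= lr * err

print("Frozen weights unchanged:", FROZEN_W == frozen_before)
print("Trained head weights:", head)
```

Because only the head is updated, each training step is cheap, but the model can only be as good as the features the frozen layers already produce.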

The dogsvscats project on github provides pre-modified prototxt files. To better understand the changes I have made, run a comparison (diff) between Caffe’s example network files and the ones in this project.

export CAFFE_PATH=/PATH/TO/CAFFE/INSTALL_DIR

cd ~/workspace/ncappzoo/apps/dogsvscats

diff -u $CAFFE_PATH/models/bvlc_googlenet bvlc_googlenet/org

To initiate the training process, run the commands listed below. Depending on how powerful your training hardware is, you can either take a beer break or a nice long nap.

# The helper scripts use $CAFFE_PATH to navigate into your Caffe installation directory.

export CAFFE_PATH=/PATH/TO/CAFFE/INSTALL_DIR

cd ~/workspace/ncappzoo/apps/dogsvscats

# Download pre-trained GoogLeNet model

$CAFFE_PATH/scripts/download_model_binary.py $CAFFE_PATH/models/bvlc_googlenet

# Start training session

$CAFFE_PATH/build/tools/caffe train --solver bvlc_googlenet/org/solver.prototxt --weights $CAFFE_PATH/models/bvlc_googlenet/bvlc_googlenet.caffemodel 2>&1 | tee bvlc_googlenet/org/train.log

If everything went well, you should see a bunch of .caffemodel and .solverstate files in the dogsvscats/bvlc_googlenet/org directory.

During my test run, the model did not converge well, so I ended up training from scratch and got better results. If you see the same problem, just rerun the training session without the --weights option. If you have any pointers on why my model didn’t converge, please let me know through the Intel Movidius developer forum.

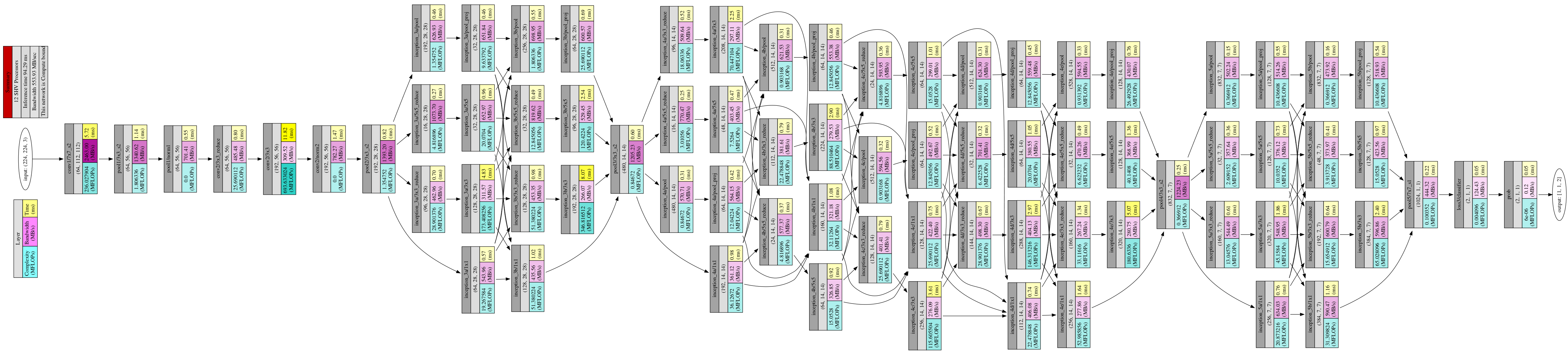

2. Profile

bvlc_googlenet_iter_xxxx.caffemodel is the weights file for the model we just trained. Let’s see if, and how well, it runs on the Neural Compute Stick. NCSDK ships with a neural network profiler called mvNCProfile, which is very useful for analyzing neural networks. The tool gives a layer-by-layer report of how well the neural network runs on the hardware.

Run the following commands on a system where NCSDK is installed, and ensure NCS is connected to the system:

cd ~/workspace/ncappzoo/apps/dogsvscats/bvlc_googlenet/org

mvNCProfile -s 12 deploy.prototxt -w bvlc_googlenet_iter_40000.caffemodel

ls -l graph

-rw-rw-r-- 1 ashwin ashwin 11972912 Dec 24 17:24 graph

You should see console output listing the bandwidth, complexity, and execution time for each layer. You can access a graphical version of the same information in output.gv.svg and output_report.html.

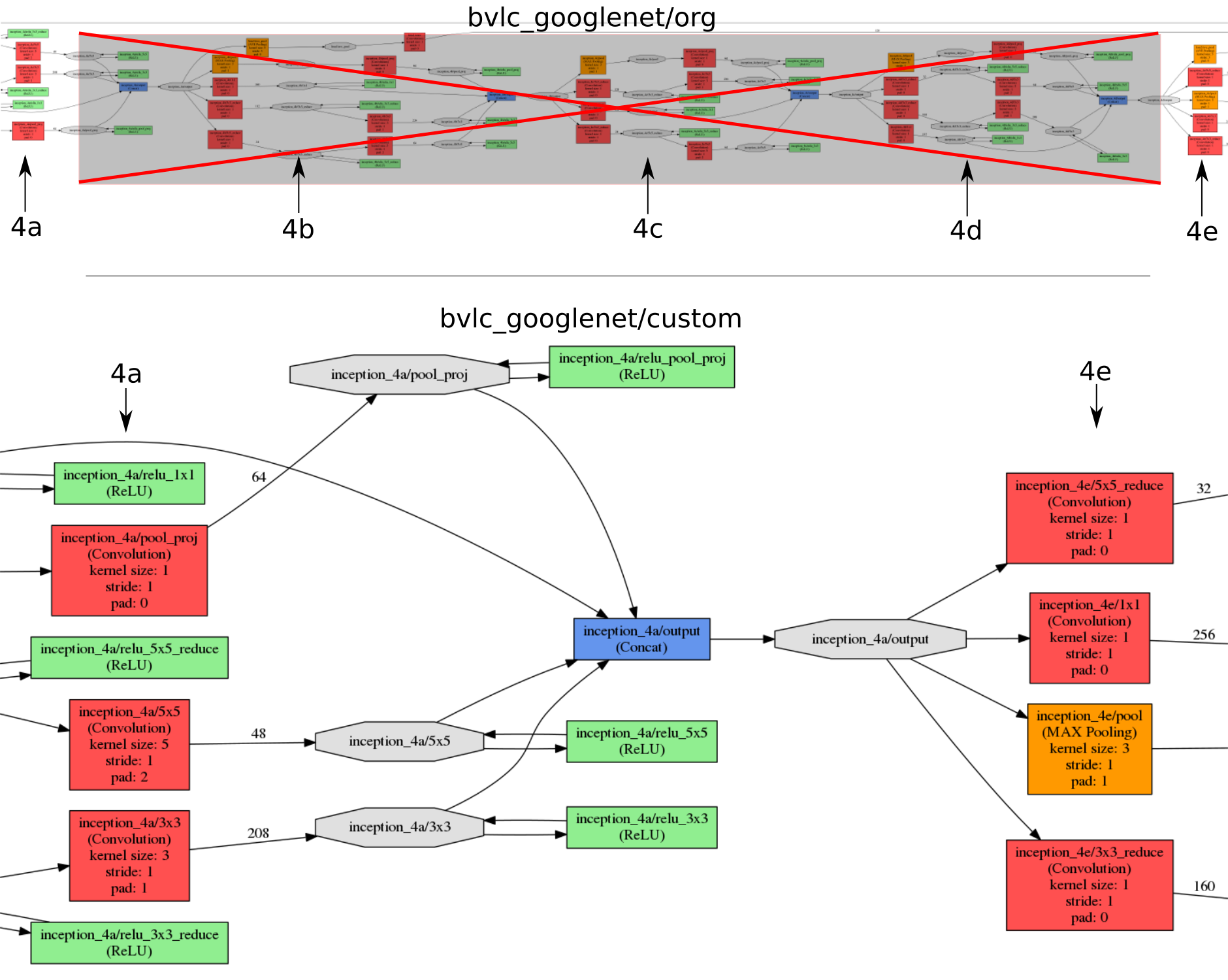

3. Fine tune

Notice how the Inception 4a, 4b, 4c, and 4d layers are the most complex layers, and they take quite a long time to do a forward pass. Theoretically, deleting these layers should shave 20-30 ms off the execution time, but what would happen to the accuracy? Let’s retrain with custom/deploy.prototxt.

Below is a pictorial representation of the changes I made to bvlc_googlenet/org/train_val.prototxt. I used $CAFFE_PATH/python/draw_net.py to plot my networks. Netscope is another good online tool to plot Caffe-based networks.

cd ~/workspace/ncappzoo/apps/dogsvscats/bvlc_googlenet/org

python $CAFFE_PATH/python/draw_net.py train_val.prototxt train_val_plot.png

cd ~/workspace/ncappzoo/apps/dogsvscats/bvlc_googlenet/custom

python $CAFFE_PATH/python/draw_net.py train_val.prototxt train_val_plot.png

# The helper scripts use $CAFFE_PATH to navigate into your Caffe installation directory.

export CAFFE_PATH=/PATH/TO/CAFFE/INSTALL_DIR

cd ~/workspace/ncappzoo/apps/dogsvscats

# Start training session

$CAFFE_PATH/build/tools/caffe train --solver bvlc_googlenet/custom/solver.prototxt 2>&1 | tee bvlc_googlenet/custom/train.log

Notice that I am not doing transfer learning (no --weights flag). Why, do you suppose? The weights from a pretrained network are tied to that specific network architecture. We made a drastic change to the original GoogLeNet architecture by deleting inception layers, so transfer learning might not yield good results.

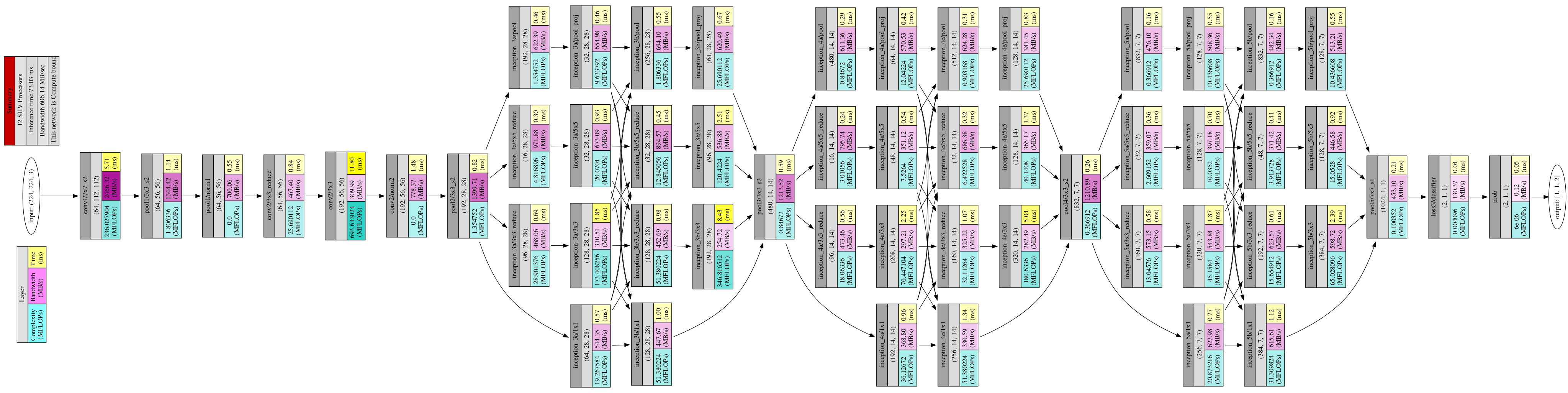

This training session will definitely be longer than a single coffee or beer break, so be patient. Once training is done, we can rerun mvNCProfile to analyze our custom neural network.

cd ~/workspace/ncappzoo/apps/dogsvscats/model

mvNCProfile -s 12 dogsvscats-custom-deploy.prototxt -w dogsvscats-custom.caffemodel

ls -l graph

-rw-rw-r-- 1 ashwin ashwin 8819152 Dec 24 17:26 graph

Looks like our custom model is 21.26 ms faster and is 3 MB smaller when compared to the original GoogLeNet. But how does this affect the network’s accuracy? Let’s plot the learning curve for the training sessions before and after customization.
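Before plotting, the size saving can be double-checked from the two ls -l listings shown earlier. The short calculation below uses only the byte counts printed in this article; the variable names are made up for the example.

```python
# Graph file sizes in bytes, copied from the two `ls -l graph` listings
ORG_GRAPH_BYTES = 11972912
CUSTOM_GRAPH_BYTES = 8819152

saved_bytes = ORG_GRAPH_BYTES - CUSTOM_GRAPH_BYTES
saved_mib = saved_bytes / 2 ** 20
saved_percent = 100.0 * saved_bytes / ORG_GRAPH_BYTES

print("Saved %d bytes (%.2f MiB, %.1f%% smaller)"
      % (saved_bytes, saved_mib, saved_percent))
# prints: Saved 3153760 bytes (3.01 MiB, 26.3% smaller)
```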

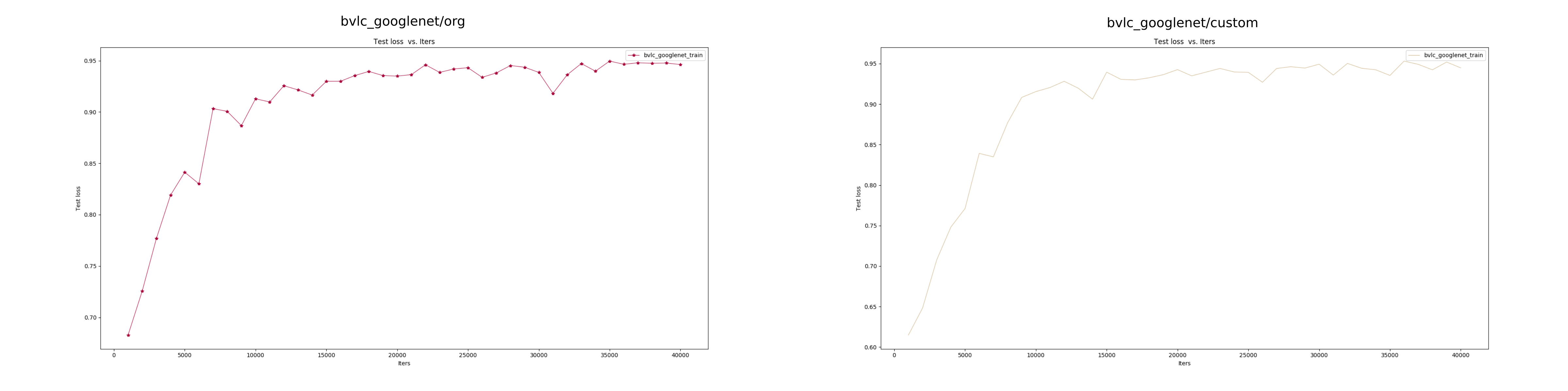

# The helper scripts use $CAFFE_PATH to navigate into your Caffe installation directory.

export CAFFE_PATH=/PATH/TO/CAFFE/INSTALL_DIR

cd ~/workspace/ncappzoo/apps/dogsvscats/bvlc_googlenet/org

# Plot learning curve

python $CAFFE_PATH/tools/extra/plot_training_log.py 2 bvlc_googlenet_train.png train.log

Plot the custom network’s learning curve in another terminal.

# The helper scripts use $CAFFE_PATH to navigate into your Caffe installation directory.

export CAFFE_PATH=/PATH/TO/CAFFE/INSTALL_DIR

cd ~/workspace/ncappzoo/apps/dogsvscats/bvlc_googlenet/custom

# Plot learning curve

python $CAFFE_PATH/tools/extra/plot_training_log.py 2 bvlc_googlenet_train.png train.log

The graph’s labels might be a little misleading, because you would expect the “Test Loss” to go down over iterations; however, it’s going up. The graph is actually plotting loss3/top-1, which is your network’s accuracy. See the loss3/top-1 layer definition in train_val.prototxt for more details.
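If you would rather read the accuracy numbers directly than rely on the plot, a small script can pull the loss3/top-1 values out of train.log. The sketch below assumes the log contains lines of the form "Iteration N, Testing net" followed by "Test net output #K: loss3/top-1 = value"; check a few lines of your own log first, since the exact format may differ. The sample lines and their values are invented for illustration and are not results from my training run.

```python
import re

# Assumed log line shapes; verify against your own train.log.
ITER_RE = re.compile(r"Iteration (\d+), Testing net")
ACC_RE = re.compile(r"Test net output #\d+: loss3/top-1 = ([0-9.eE+-]+)")

def parse_accuracy(log_lines):
    """Return a list of (iteration, top-1 accuracy) pairs."""
    results = []
    current_iter = None
    for line in log_lines:
        match = ITER_RE.search(line)
        if match:
            current_iter = int(match.group(1))
            continue
        match = ACC_RE.search(line)
        if match and current_iter is not None:
            results.append((current_iter, float(match.group(1))))
    return results

# Made-up sample lines, for illustration only
sample_log = [
    "I1224 10:00:00.000000  1234 solver.cpp:337] Iteration 0, Testing net (#0)",
    "I1224 10:00:05.000000  1234 solver.cpp:404]     Test net output #0: loss3/loss3 = 0.69",
    "I1224 10:00:05.000000  1234 solver.cpp:404]     Test net output #1: loss3/top-1 = 0.5",
    "I1224 10:30:00.000000  1234 solver.cpp:337] Iteration 1000, Testing net (#0)",
    "I1224 10:30:05.000000  1234 solver.cpp:404]     Test net output #1: loss3/top-1 = 0.93",
]

for iteration, accuracy in parse_accuracy(sample_log):
    print("iteration %d: top-1 accuracy %.2f" % (iteration, accuracy))
```

To use it on a real log, pass the lines of the file instead of sample_log, for example parse_accuracy(open("train.log")).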

When I ran the training sessions, there was very little difference between the accuracy of the two networks. I believe this is because of the small number of classes, i.e. fewer features. A larger number of classes would probably show some noticeable difference in accuracy.

4. Deploy

Now that we are satisfied with the performance of our neural network, we can deploy it on an edge device like a Raspberry Pi or a MinnowBoard. Let’s use image-classifier to load the graph and perform inference on a specific image. We would have to make some changes to the original code so that we are applying the right mean and scaling factor, and are pointing the code to the right graph and test image.

--- a/apps/image-classifier/image-classifier.py

+++ b/apps/image-classifier/image-classifier.py

@@ -16,10 +16,10 @@ import sys

# User modifiable input parameters

NCAPPZOO_PATH = '../..'

-GRAPH_PATH = NCAPPZOO_PATH + '/caffe/GoogLeNet/graph'

-IMAGE_PATH = NCAPPZOO_PATH + '/data/images/cat.jpg'

-CATEGORIES_PATH = NCAPPZOO_PATH + '/data/ilsvrc12/synset_words.txt'

-IMAGE_MEAN = numpy.float16( [104.00698793, 116.66876762, 122.67891434] )

+GRAPH_PATH = NCAPPZOO_PATH + '/apps/dogsvscats/bvlc_googlenet/custom/graph'

+IMAGE_PATH = NCAPPZOO_PATH + '/apps/dogsvscats/data/test1/173.jpg'

+CATEGORIES_PATH = NCAPPZOO_PATH + '/apps/dogsvscats/data/categories.txt'

+IMAGE_MEAN = numpy.float16( [106.202, 115.912, 124.449] )

IMAGE_STDDEV = ( 1 )

IMAGE_DIM = ( 224, 224 )

@@ -77,7 +77,7 @@ order = output.argsort()[::-1][:6]

# Get execution time

inference_time = graph.GetGraphOption( mvnc.GraphOption.TIME_TAKEN )

-for i in range( 0, 4 ):

+for i in range( 0, 2 ):

print( "Prediction for "

+ ": " + categories[order[i]]

+ " with %3.1f%% confidence"

Run image-classifier after you have made the above changes:

cd ~/workspace/ncappzoo/apps/image-classifier

python3 image-classifier.py

------- predictions --------

Prediction for : Dog with 98.4% confidence in 90.46 ms

Prediction for : Cat with 1.7% confidence in 90.46 ms
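The change from range( 0, 4 ) to range( 0, 2 ) in the diff follows from the network now having only two outputs. A minimal sketch of the ranking step, in plain Python rather than the numpy call used by image-classifier, is shown below; the contents and order of the category list and the output values are assumptions chosen to resemble the result above.

```python
def top_predictions(output, categories, k=2):
    """Return the k most likely (label, confidence in percent) pairs."""
    # Sort class indices by descending probability, like argsort()[::-1]
    order = sorted(range(len(output)), key=lambda i: output[i], reverse=True)
    return [(categories[i], 100.0 * output[i]) for i in order[:k]]

categories = ["Cat", "Dog"]   # assumed contents of categories.txt
output = [0.017, 0.984]       # hypothetical network output for one image

for label, confidence in top_predictions(output, categories):
    print("Prediction: %s with %3.1f%% confidence" % (label, confidence))
```

With a two-class output there are only two entries to rank, so asking for the top four predictions would index past the end of the list.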

Another useful tool to test your image classifier is rapid-image-classifier, which reads all the images in a folder (and its sub-folders) and prints out the inference results. Make the same changes we made for image-classifier above, and run the app.

cd ~/workspace/ncappzoo/apps/rapid-image-classifier

python3 rapid-image-classifier.py

Pre-processing images...

Prediction for 6325.jpg: Cat with 100.0% confidence in 90.00 ms

Prediction for 7204.jpg: Cat with 100.0% confidence in 73.67 ms

Prediction for 3384.jpg: Dog with 100.0% confidence in 73.72 ms

Prediction for 5487.jpg: Dog with 100.0% confidence in 73.27 ms

Prediction for 1185.jpg: Cat with 100.0% confidence in 73.60 ms

Prediction for 3845.jpg: Dog with 68.5% confidence in 73.29 ms

...

...

Congratulations! You just deployed a custom Caffe-based deep neural network on an edge device.

Notice the ~20 ms difference between the first and second inference? This is because the first call to loadTensor after opening the device and loading the graph takes more time than consecutive calls.
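When benchmarking, it is therefore reasonable to treat the first inference as a warm-up and report the average of the remaining calls. The sketch below does this for the six timings printed by rapid-image-classifier above; the helper name is made up for the example.

```python
# Inference times in ms, copied from the rapid-image-classifier output above
times_ms = [90.00, 73.67, 73.72, 73.27, 73.60, 73.29]

def mean(values):
    return sum(values) / len(values)

warmup_ms = times_ms[0]
steady_ms = mean(times_ms[1:])    # skip the first (warm-up) call

print("First call: %.2f ms" % warmup_ms)
print("Steady-state average: %.2f ms" % steady_ms)
print("Warm-up overhead: %.2f ms" % (warmup_ms - steady_ms))
```

For these six samples the steady-state average works out to about 73.5 ms, so including the first call would overstate the per-frame inference time.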

In the above image-classifier and rapid-image-classifier examples, we used the default graph file created by mvNCProfile, but you can choose to generate a graph file with a custom name and use that in your app instead.

cd ~/workspace/ncappzoo/apps/dogsvscats/bvlc_googlenet/org

mvNCCompile -s 12 deploy.prototxt -w bvlc_googlenet_iter_40000.caffemodel -o dogsvscats-org.graph

Further experiments

- Apply data augmentation techniques to improve your model’s accuracy.

- GoogLeNet (even our customized version) is probably overkill for dogs vs. cats classification; try a simpler network like VGG 16, ResNet-18, or LeNet.

- You can find a list of validated networks on NCSDK’s release notes.

Further reading

- Here’s a good article on how to improve your model’s accuracy by minimizing underfitting and overfitting.

- Andrej Karpathy did an excellent job of explaining transfer learning in his CS231n notes. Pay special attention to the ‘Constraints from pretrained models’ section.

- Detailed documentation on mvNCProfile tool.