Using and Understanding the Neural Compute SDK: mvNCCheck

Neural Compute SDK Toolkit: mvNCCheck

The Intel® Movidius™ Neural Compute Software Development Kit (NCSDK) comes with three tools that are designed to help users get up and running with their Intel® Movidius™ Neural Compute Stick (Intel® Movidius™ NCS): mvNCCheck, mvNCCompile, and mvNCProfile. In this article, we will aim to provide a better understanding of how the mvNCCheck tool works and how it fits into the overall workflow of the Neural Compute SDK.

Fast track: Let’s check a network using mvNCCheck!

You will learn…

- How to use the mvNCCheck tool

- How to interpret the output from mvNCCheck

You will need…

- An Intel Movidius Neural Compute Stick - Where to buy

- An x86_64 laptop/desktop running Ubuntu 16.04

If you haven’t already done so, install NCSDK on your development machine. Refer to the Intel Movidius NCS Quick Start Guide for installation instructions.

Checking a network

Step 1 - Open a terminal and navigate to ncsdk/examples/caffe/GoogLeNet

Step 2 - Let’s use mvNCCheck to validate the network on the Intel Movidius NCS

mvNCCheck deploy.prototxt -w bvlc_googlenet.caffemodel

Step 3 - You’re done! You should see a screen similar to the one below:

USB: Myriad Connection Closing.

USB: Myriad Connection Closed.

Result: (1000,)

1) 885 0.3015

2) 911 0.05157

3) 904 0.04227

4) 700 0.03424

5) 794 0.03265

Expected: (1000,)

1) 885 0.3015

2) 911 0.0518

3) 904 0.0417

4) 700 0.03415

5) 794 0.0325

------------------------------------------------------------

Obtained values

------------------------------------------------------------

Obtained Min Pixel Accuracy: 0.1923076924867928% (max allowed=2%), Pass

Obtained Average Pixel Accuracy: 0.004342026295489632% (max allowed=1%), Pass

Obtained Percentage of wrong values: 0.0% (max allowed=0%), Pass

Obtained Pixel-wise L2 error: 0.010001560141939479% (max allowed=1%), Pass

Obtained Global Sum Difference: 0.013091802597045898

------------------------------------------------------------

What does mvNCCheck do and why do we need it?

As part of the NCSDK, mvNCCheck serves three main purposes:

- Ensure accuracy when the data is converted from fp32 to fp16

- Quickly find out if a network is compatible with the Intel NCS

- Quickly debug the network layer by layer

Ensuring accurate results

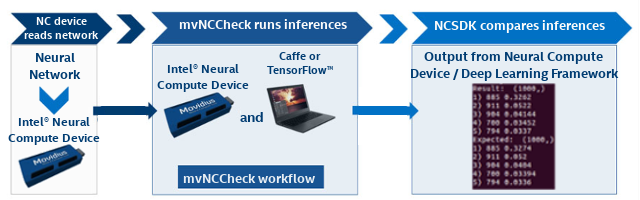

To ensure accurate results, mvNCCheck compares inference results between the Intel Movidius NCS and the network’s native framework (Caffe/TensorFlow™). Since the Intel Movidius NCS and NCSDK use 16-bit floating point data, the incoming 32-bit floating point data must be converted to 16-bit floats. The conversion from fp32 to fp16 can introduce minor rounding errors in the inference results, and this is where mvNCCheck comes in handy: it checks whether your network still produces accurate results after the conversion.
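To get a feel for the size of the rounding involved, here is a minimal sketch that rounds a few values to half precision using only the Python standard library. It is an illustration rather than anything mvNCCheck itself does: the helper name `to_fp16` is made up for this example, Python floats are 64-bit rather than 32-bit, and the sample values are simply the framework probabilities from the report above. It also shows only the rounding of individual values, not the error that accumulates across the layers of a network.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    # The struct format "e" is a 16-bit float, so a pack/unpack round trip
    # returns the closest value that fp16 can represent.
    return struct.unpack("<e", struct.pack("<e", value))[0]


# Sample values taken from the "Expected" column of the report above.
samples = [0.3015, 0.0518, 0.0417, 0.03415, 0.0325]

for original in samples:
    rounded = to_fp16(original)
    print(f"{original:.6f} -> {rounded:.6f} (abs diff {abs(original - rounded):.2e})")
```

For values in fp16's normal range, the relative rounding error of a single value stays below 2^-11 (about 0.05 percent), which corresponds to roughly three significant decimal digits.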

First the mvNCCheck tool reads in the network and converts the model to Intel Movidius NCS format. It then runs an inference through the network on the Intel Movidius NCS, and it also runs an inference with the network’s native framework (Caffe/TensorFlow).

Finally the mvNCCheck tool displays a brief report that compares inference results from the Intel Movidius NCS and from the native framework. These results can be used to confirm that a neural network is producing accurate results after the fp32 to fp16 conversion on the Intel Movidius NCS. Further details on the comparison results will be discussed below.

Determine network compatibility with Intel Movidius NCS

mvNCCheck can also be used as a tool to simply check if a network is compatible with the Intel Movidius NCS. There are a number of limitations that could cause a network to not be compatible with the Intel Movidius NCS including, but not limited to, memory constraints, layers not being supported, or unsupported neural network architectures. For more information on limitations, please visit the Intel Movidius NCS documentation website for TensorFlow and Caffe frameworks. Additionally you can view the latest NCSDK Release Notes for more information on errata and new release features for the SDK.

Debugging networks with mvNCCheck

If your network isn’t working as expected, mvNCCheck can be used to debug your network. This can be done by running mvNCCheck with the -in and -on options.

- The -in option allows you to specify a node as the input node

- The -on option allows you to specify a node as the output node

Using the -in and -on arguments with mvNCCheck, it is possible to pinpoint which layer the errors or discrepancies originate from by comparing the Intel Movidius NCS results with the Caffe/TensorFlow results, either layer by layer or with a binary search.

Debugging example:

Let’s assume your network architecture is as follows:

- Input - Data

- conv1 - Convolution Layer

- pooling1 - Pooling Layer

- conv2 - Convolution Layer

- pooling2 - Pooling Layer

- Softmax - Softmax

Let’s pretend you are getting nan (not a number) results when running mvNCCheck. You can use the -on option to check the output of the first convolution layer, “conv1”, by running the following command: mvNCCheck user_network -w user_weights -in input -on conv1. With a large network, a binary search helps reduce the time needed to find the layer where the issue originates.
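The binary search idea can be sketched as follows. This is a rough illustration under stated assumptions, not part of the NCSDK: `build_check_command` and `first_failing_layer` are hypothetical helpers, the `passes` callback stands in for actually running mvNCCheck with a given -on node and reading its report, and the search assumes that once a layer produces bad output, every later layer does too.

```python
from typing import Callable, List, Optional


def build_check_command(network: str, weights: str,
                        input_node: str, output_node: str) -> List[str]:
    """Assemble the mvNCCheck command line described in the text above."""
    return ["mvNCCheck", network, "-w", weights,
            "-in", input_node, "-on", output_node]


def first_failing_layer(layers: List[str],
                        passes: Callable[[str], bool]) -> Optional[str]:
    """Binary search for the earliest layer whose output fails the check.

    Assumes failures are monotonic: every layer after the first bad one
    also fails. Returns None when every layer passes.
    """
    lo, hi = 0, len(layers) - 1
    found = None
    while lo <= hi:
        mid = (lo + hi) // 2
        if passes(layers[mid]):
            lo = mid + 1          # problem, if any, is later in the network
        else:
            found = layers[mid]   # remember it and look for an earlier one
            hi = mid - 1
    return found


layers = ["conv1", "pooling1", "conv2", "pooling2", "Softmax"]

# Stand-in for real mvNCCheck runs: pretend the error first appears at conv2.
bad_from = layers.index("conv2")
result = first_failing_layer(layers, lambda name: layers.index(name) < bad_from)

print(result)
print(" ".join(build_check_command("user_network", "user_weights", "input", result)))
```

For the five-layer example the search needs three checks instead of up to five; the saving grows with the depth of the network.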

Understanding the output of mvNCCheck

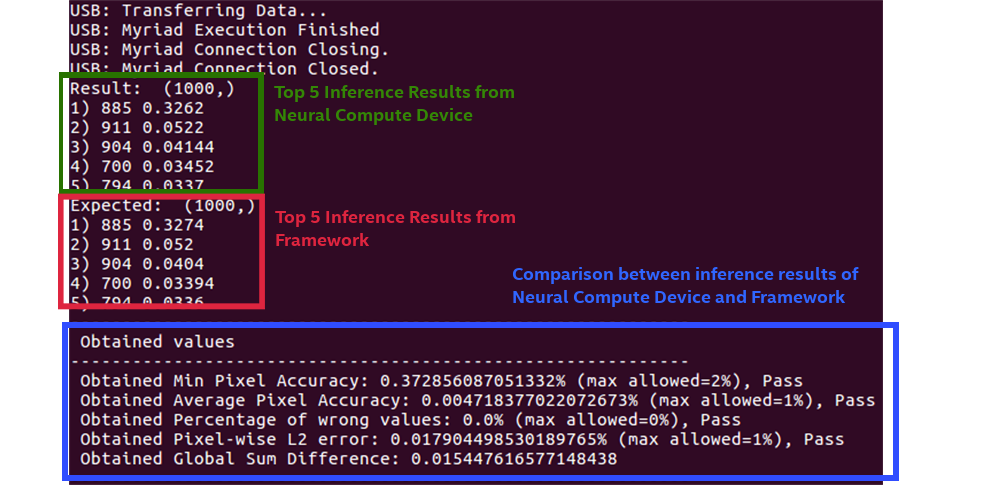

Let’s examine the output of mvNCCheck above.

- The results in the green box are the top five Intel NCS inference results

- The results in the red box are the top five framework results from either Caffe or TensorFlow

- The comparison output (shown in blue) shows various comparisons between the two inference results

To understand these results in more detail, we have to understand that the outputs from the Intel Movidius NCS and from Caffe/TensorFlow are each stored in a tensor (put simply, a tensor is an array of values). Each of the five comparison tests is a mathematical comparison between the two tensors.

Legend:

- ACTUAL – the tensor output by the Neural Compute Stick

- EXPECTED – the tensor output by the framework (Caffe or TensorFlow)

- Abs – calculate the absolute value

- Max – Find the maximum value from a tensor(s)

- Sqrt – Find the square root of a value

- Sum – Find the sum of a set of values

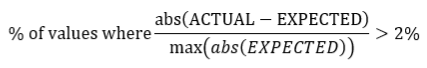

Min Pixel Accuracy:

This value represents the largest difference between the two output tensors’ values.

Average Pixel Accuracy:

This is the average difference between the two tensors’ values.

Percentage of wrong values:

This value represents the percentage of Intel Movidius NCS tensor values that differ by more than 2 percent from the framework tensor.

- Why the 2% threshold? The 2 percent threshold comes from the expected impact of reducing the precision from fp32 to fp16.

Pixel-wise L2 error:

This value is a rough relative error of the entire output tensor.

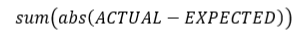

Global Sum Difference:

The sum of all of the differences between the Intel Movidius NCS tensor and the framework tensor.
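The five comparisons can be approximated in a few lines of Python built from the Abs, Max, Sqrt and Sum operations in the legend. Treat this as a sketch under assumptions rather than the tool's actual implementation: the function name and dictionary keys are invented for this example, and two details are guesses that the descriptions above do not spell out, namely that the percentages are normalised by the largest magnitude in EXPECTED and that a "wrong" value is one whose difference exceeds 2 percent of that magnitude.

```python
import math
from typing import Dict, List


def compare_tensors(actual: List[float], expected: List[float],
                    threshold: float = 0.02) -> Dict[str, float]:
    """Compare two flat tensors of equal length, mvNCCheck-style."""
    diffs = [abs(a - e) for a, e in zip(actual, expected)]
    # Assumption: percentages are relative to the largest expected magnitude.
    scale = max(abs(e) for e in expected)
    count = len(diffs)
    return {
        # Largest single difference.
        "min_pixel_accuracy_pct": max(diffs) / scale * 100,
        # Mean difference.
        "average_pixel_accuracy_pct": sum(diffs) / count / scale * 100,
        # Share of values whose difference exceeds the threshold (assumed
        # to be taken relative to the largest expected magnitude).
        "wrong_values_pct": sum(d > threshold * scale for d in diffs) / count * 100,
        # Root-mean-square difference.
        "pixelwise_l2_error_pct": math.sqrt(sum(d * d for d in diffs) / count) / scale * 100,
        # Plain sum of the absolute differences, not a percentage.
        "global_sum_difference": sum(diffs),
    }


# Only the top five values from the report above, so the numbers will not
# match the report, which was computed over all 1000 outputs.
actual = [0.3015, 0.05157, 0.04227, 0.03424, 0.03265]
expected = [0.3015, 0.0518, 0.0417, 0.03415, 0.0325]

for name, value in compare_tensors(actual, expected).items():
    print(f"{name}: {value}")
```

Reading the metrics side by side is useful: a large maximum difference combined with a small average points to a few outlier values, while a large average suggests the whole output has drifted.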

How did mvNCCheck run an inference without an input?

When making a forward pass through a neural network, it is common to supply a tensor or array of numerical values as input. If no input is specified, mvNCCheck uses an input tensor of random float values ranging from -1 to 1. It is also possible to specify an image input with mvNCCheck by using the “-i” argument followed by the path of the image file.
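As a rough picture of what such a default input looks like, the sketch below fills a flat tensor with random floats between -1 and 1 using the Python standard library. The helper name, the fixed seed and the 3 x 224 x 224 size are assumptions chosen for illustration; mvNCCheck's own generator and tensor layout may differ.

```python
import random
from typing import List


def random_input(num_values: int, seed: int = 0) -> List[float]:
    """Return num_values floats drawn uniformly from the range -1 to 1."""
    rng = random.Random(seed)  # seeded so repeated runs give the same tensor
    return [rng.uniform(-1.0, 1.0) for _ in range(num_values)]


# Assumed input size for illustration: 3 channels of 224 x 224 pixels.
values = random_input(3 * 224 * 224)
print(len(values), min(values), max(values))
```

A random input is enough to compare the two inference paths numerically, but the top five results will not correspond to a meaningful classification; use the -i option with a real image for that.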

Examining a Failed Case

If you run mvNCCheck and your network fails, it may be for one of the following reasons.

Input Scaling

Some neural networks expect the input values to be scaled. If the inputs are not scaled, this can result in the Intel Movidius NCS inference results differing from the framework inference results.

When using mvNCCheck, you can use the -S option to specify the divisor used to scale the input. Images are commonly stored with the values of each color channel in the range 0-255. If a neural network expects values from 0.0 to 1.0, then the -S 255 option divides all input values by 255, scaling the inputs to the range 0.0 to 1.0.

The -M option can be used to subtract the mean from the input. For example, if a neural network expects input values ranging from -1 to 1, you can use the -S 128 and -M 128 options together to scale the input to the range -1 to 1.
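The arithmetic behind these two options can be sketched as below. This is an illustration only: `preprocess` is a hypothetical helper, and it assumes the mean is subtracted before the division, which is the order that makes -S 128 with -M 128 land in the range -1 to 1.

```python
from typing import List


def preprocess(pixels: List[float], mean: float = 0.0, scale: float = 1.0) -> List[float]:
    """Subtract the mean (-M) and then divide by the scale (-S)."""
    return [(p - mean) / scale for p in pixels]


pixels = [0.0, 128.0, 255.0]

# Equivalent of -S 255: maps 0-255 onto 0.0-1.0.
unit_range = preprocess(pixels, scale=255.0)

# Equivalent of -S 128 -M 128: maps 0-255 onto roughly -1 to 1.
signed_range = preprocess(pixels, mean=128.0, scale=128.0)

print(unit_range)
print(signed_range)
```

Note that with a mean and scale of 128 the brightest pixel maps to 127/128, just under 1, which is normally close enough for a network trained on inputs in the range -1 to 1.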

Unsupported layers

Not all neural network architectures and layers are supported by the Intel Movidius NCS. If you receive an error message saying “Stage Details Not Supported” after running mvNCCheck, the network you have chosen may require operations or layers that are not yet supported by the Neural Compute SDK. For a list of all supported layers, please visit the Neural Compute Caffe Support and Neural Compute TensorFlow Support documentation sites.

Bugs

Another possible cause of incorrect results is a bug. Please report all bugs to the Intel Movidius Neural Compute Developer Forum.

More mvNCCheck options

For a complete listing of the mvNCCheck arguments, please visit the mvNCCheck documentation website.

Further reading

- Understand the entire development workflow for Intel Movidius NCS.

- Here’s a good write-up on network configuration, which includes mean subtraction and scaling topics.