Introduction

The Intel® Movidius™ Neural Compute SDK (Intel® Movidius™ NCSDK) enables rapid prototyping and deployment of deep neural networks (DNNs) on compatible neural compute devices such as the Intel® Movidius™ Neural Compute Stick. The NCSDK includes a set of software tools to compile, profile, and check (validate) DNNs, as well as the Intel® Movidius™ Neural Compute API (Intel® Movidius™ NCAPI) for application development in C/C++ or Python.

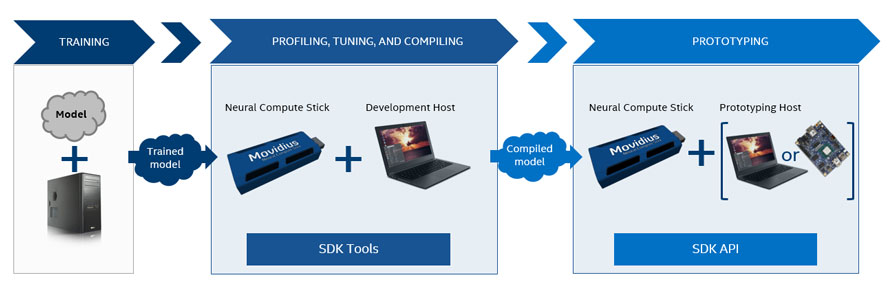

The NCSDK supports two general use cases:

- Profiling, tuning, and compiling a DNN model on a development computer (host system) with the tools provided in the NCSDK.

- Prototyping a user application on a development computer (host system) that uses the NCAPI to access the neural compute device hardware and accelerate DNN inference.
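To make the second use case concrete, here is a minimal sketch of a single inference from Python. It assumes the NCAPI v2 Python bindings (`mvnc.mvncapi`) are installed and that a graph file has already been compiled with the NCSDK tools. The function name `run_inference` and its `api` parameter are hypothetical and exist only for this illustration; the input tensor is assumed to be already preprocessed into the layout and data type the network expects.

```python
def run_inference(graph_buffer, input_tensor, api=None):
    """Run one inference on the first attached neural compute device.

    graph_buffer: bytes of a compiled graph file.
    input_tensor: preprocessed input (assumed to match the network's input).
    api: module exposing the NCAPI v2 names used below; defaults to mvncapi.
         (Hypothetical parameter, added so the sketch can be exercised
         without hardware.)
    """
    if api is None:
        # Imported lazily so the sketch loads on machines without the NCSDK.
        from mvnc import mvncapi as api

    handles = api.enumerate_devices()
    if not handles:
        raise RuntimeError("No neural compute device found")

    device = api.Device(handles[0])
    device.open()
    try:
        graph = api.Graph("graph1")
        # Allocates the graph on the device and creates input/output queues.
        input_fifo, output_fifo = graph.allocate_with_fifos(device, graph_buffer)
        try:
            graph.queue_inference_with_fifo_elem(
                input_fifo, output_fifo, input_tensor, None)
            output, _user_obj = output_fifo.read_elem()
        finally:
            input_fifo.destroy()
            output_fifo.destroy()
            graph.destroy()
    finally:
        device.close()
        device.destroy()
    return output
```

A caller would typically read the compiled graph with `open(path, "rb").read()` and pass the resulting bytes as `graph_buffer`. Cleanup runs in `finally` blocks so the device is released even if the inference fails.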

The following diagram shows the typical workflow for development with the NCSDK:

Note that the network training phase does not use the NCSDK.

NCSDK2

NCSDK 2.x introduces several new features:

- NCAPI v2 - a more flexible API that supports multiple graphs on the same device, queued input and output, and 32-bit floating-point (FP32) data types. Note: NCAPI v2 is not backward compatible with NCAPI v1.

- New installation options:

Documentation Overview

Installation

Instructions for basic installation of the NCSDK and NCAPI, as well as for installation with virtualenv, in a Docker container, or in a virtual machine.

Frameworks

Instructions for compiling Caffe or TensorFlow* networks for use with the NCSDK.

Toolkit

Documentation for the tools included with the NCSDK - mvNCCheck, mvNCCompile, and mvNCProfile.
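As a rough illustration of how these tools are invoked, the sketch below builds command lines for them in Python. It assumes the commonly documented options `-w` (weights file), `-s` (number of SHAVE cores), and `-o` (output graph file, passed here only to mvNCCompile); the helper name `toolkit_command` and the file names in the usage note are hypothetical. Consult the Toolkit documentation for the full option list of each tool.

```python
TOOLS = ("mvNCCheck", "mvNCCompile", "mvNCProfile")


def toolkit_command(tool, network, weights=None, shaves=None, output=None):
    """Build an argument list for one of the NCSDK command-line tools.

    Hypothetical helper for illustration; it only assembles arguments and
    does not run anything.
    """
    if tool not in TOOLS:
        raise ValueError("unknown tool: " + tool)
    cmd = [tool, network]
    if weights is not None:
        cmd += ["-w", weights]          # trained weights file
    if shaves is not None:
        cmd += ["-s", str(shaves)]      # number of SHAVE cores to use
    if output is not None:
        if tool != "mvNCCompile":
            # Assumption: this sketch only passes -o to the compiler.
            raise ValueError("this sketch only passes -o to mvNCCompile")
        cmd += ["-o", output]           # compiled graph file name
    return cmd
```

For example, `toolkit_command("mvNCCompile", "network.prototxt", weights="network.caffemodel", shaves=12, output="graph")` produces a list that can be handed to `subprocess.run(cmd, check=True)` on a host system where the NCSDK is installed.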

API

Documentation for the NCAPI.

Examples

An overview of the examples included with the NCSDK. Additional examples can be found in our Neural Compute App Zoo GitHub repository.

Release Notes

The latest NCSDK release notes.

Support

Troubleshooting and support information.